September 25, 2025

How to transform financial transaction data with machine learning

If you’ve ever looked at raw financial transaction data, you know it’s a mess. One merchant can show up in hundreds of different ways – crammed with payment prefixes, timestamps, legal terms, and all kinds of extra noise.

This mess makes it hard for banks and fintechs to deliver what customers expect today: intuitive, transparent experiences, real-time insights, and meaningful engagement based on actual behaviour.

That’s why combining financial transaction data with machine learning is becoming increasingly important.

At Snowdrop, our team has been tackling this challenge head-on – with a domain-specific Natural Language Processing (NLP) solution purpose-built to clean up financial transaction strings and turn them into structured, actionable data.

The challenges of messy financial transaction data

Let’s take a look at what raw merchant data really looks like:

- FCB AG Ticketing, Muenchen, DE

- PULLBEAR 6505 CENTRUM.G, DRESDEN, DE

- Gillet Tanken Waschen, Landau in der, DE

It’s hard for traditional systems to tell the difference between what’s useful (the actual brand) and what’s just noise (like “Ticketing”, “Centrum”, or “AG”). Reliable automated classification is out of reach too, unless you use machine learning models trained specifically on financial transaction data.

Generic NLP can’t handle this. And without a strong understanding of industry-specific terminology and regional context, any attempt at enrichment or contextualisation falls flat.

So, what did we build?

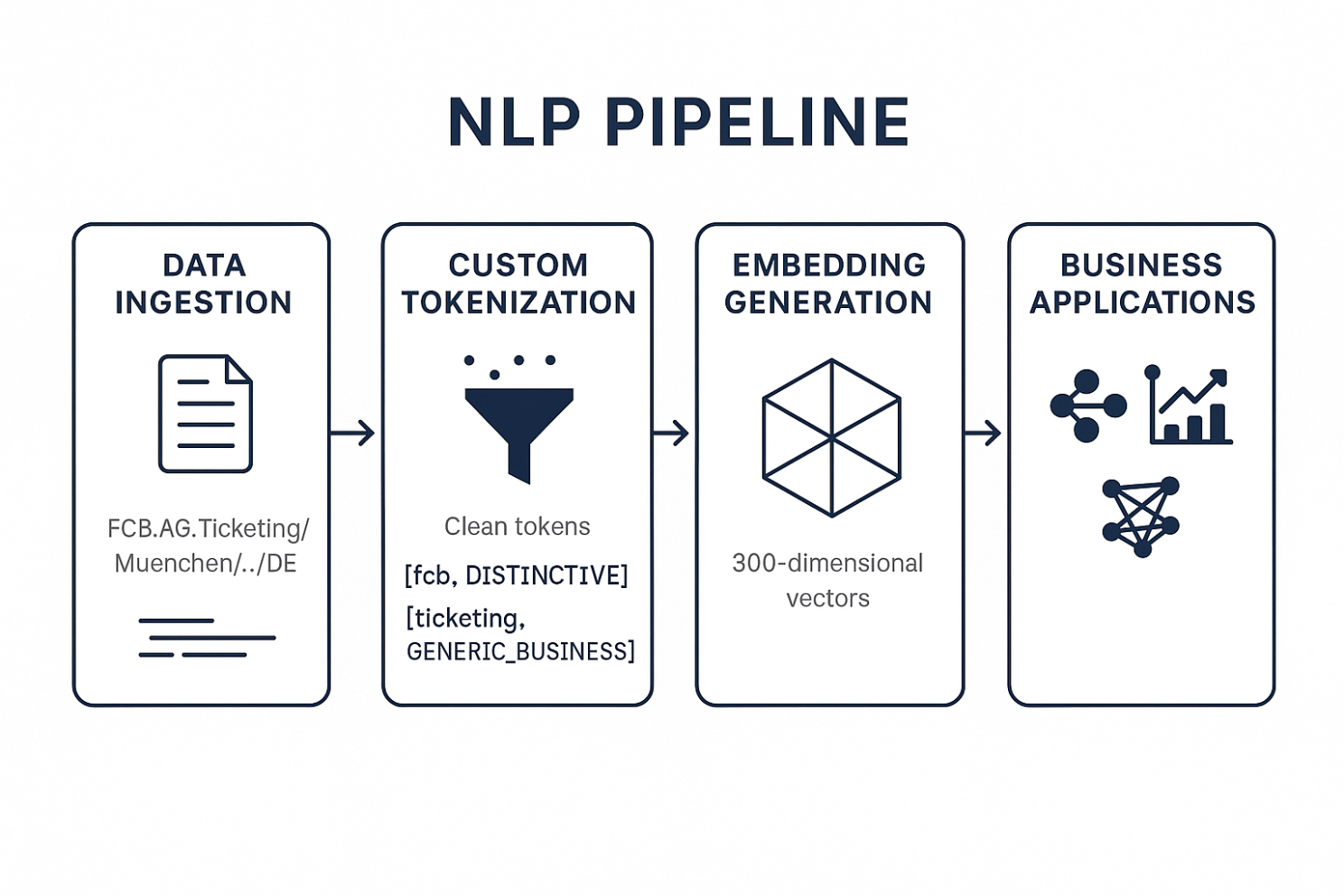

Instead of trying to force general-purpose AI models into a financial context, we built something from the ground up: a lightweight, high-precision NLP pipeline tailored to financial data and powered by machine learning.

Let’s see how it works:

- Custom tokenizer trained on millions of real transactions

- PMI (Pointwise Mutual Information) to detect brand-relevant multi-word terms

- FastText subword embeddings to boost recognition of rare or misspelled brand names

- Domain dictionaries that classify legal suffixes, geographic indicators, and generic business terms
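As an illustration of the PMI step, here is a minimal Python sketch that scores adjacent token pairs with pointwise mutual information, PMI(x, y) = log(p(x, y) / (p(x) · p(y))), where p(x, y) is the bigram probability and p(x), p(y) are unigram probabilities (natural log). The toy corpus, the function name `pmi_bigrams`, and the `min_count` and `threshold` values are hypothetical choices for this example, not Snowdrop’s production data or settings.

```python
import math
from collections import Counter

# Hypothetical sketch: min_count and threshold values are illustrative only.


def pmi_bigrams(docs, min_count=2, threshold=1.0):
    """Score adjacent token pairs by PMI and keep those above `threshold`.

    docs: list of token lists, one per (already normalised) transaction string.
    min_count: skip pairs seen fewer times; PMI is unreliable for rare pairs.
    """
    unigrams, bigrams = Counter(), Counter()
    for tokens in docs:
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))

    n_uni, n_bi = sum(unigrams.values()), sum(bigrams.values())
    scores = {}
    for (w1, w2), count in bigrams.items():
        if count < min_count:
            continue
        p_xy = count / n_bi          # joint probability of the adjacent pair
        p_x = unigrams[w1] / n_uni   # marginal probability of each token
        p_y = unigrams[w2] / n_uni
        pmi = math.log(p_xy / (p_x * p_y))  # natural log
        if pmi > threshold:
            scores[(w1, w2)] = pmi
    return scores


# Made-up toy corpus; real input would be millions of tokenised transactions.
corpus = [
    ["erlebnis", "akademie", "bad", "kissingen"],
    ["erlebnis", "akademie", "online"],
    ["erlebnis", "akademie", "ticket"],
    ["rewe", "markt", "berlin"],
    ["rewe", "markt", "hamburg"],
    ["aral", "station", "berlin"],
]

for pair, score in sorted(pmi_bigrams(corpus).items(), key=lambda kv: -kv[1]):
    print("_".join(pair), round(score, 2))
```

In this toy corpus only “rewe markt” and “erlebnis akademie” clear the threshold; every pair seen once is skipped by `min_count`. PMI alone cannot tell that “markt” is a generic word, which is where the domain dictionaries in the list above come in.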

As a result, our system automatically picks up on what matters – the brand identity – and filters out everything else.

What makes it smart? Machine learning in action

Every transaction string runs through several stages:

- Cleanup and normalisation

Strips out payment metadata like “KARTE 4012…”, timestamps, random punctuation. - Sequence detection

Combines multi-word merchant names into clean, unified tokens (e.g., erlebnis_akademie). - Classification

Flags tokens as distinctive or generic based on how rare or common they are in our database. - Vector embedding

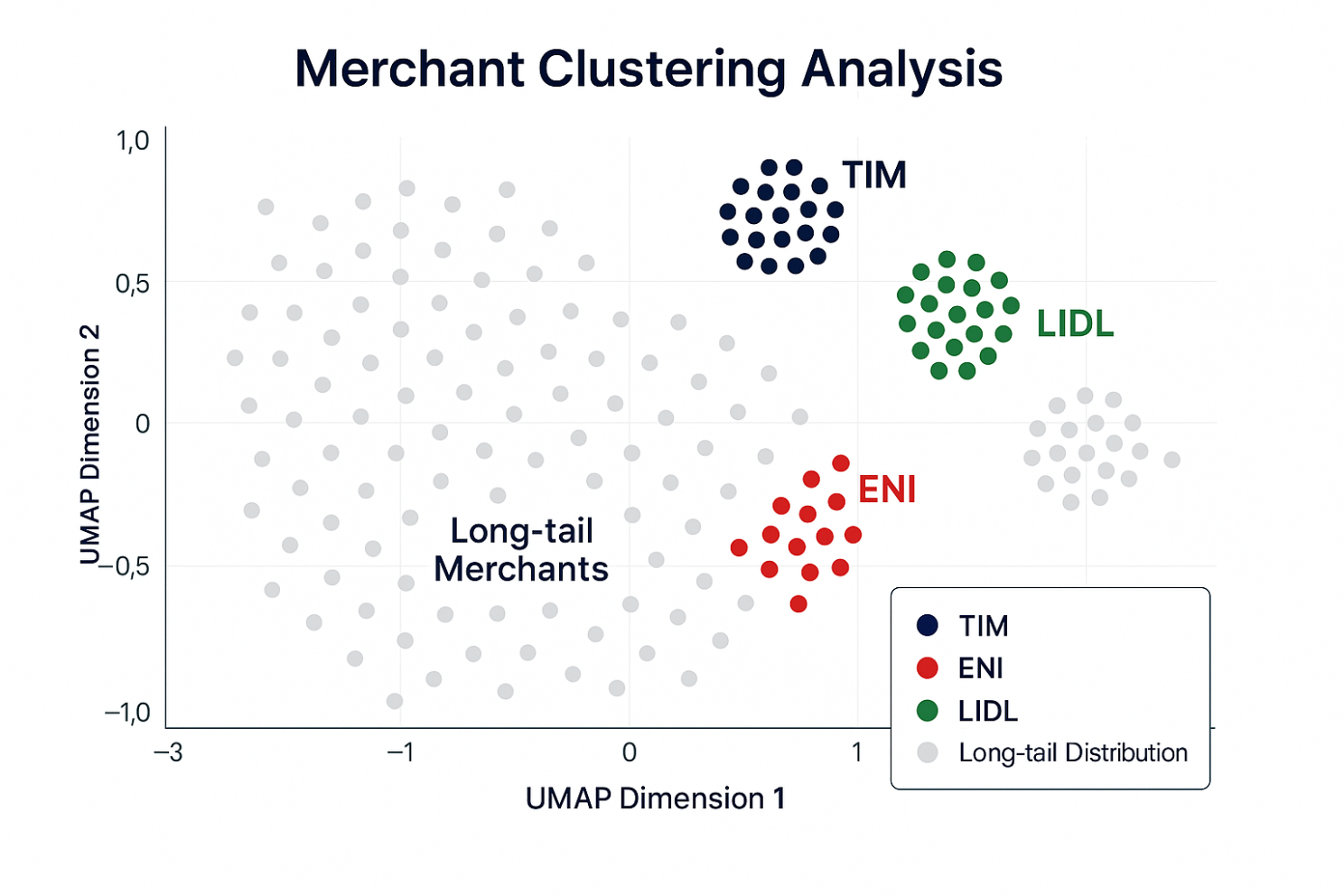

Every enriched merchant gets converted into a precise 300-dimensional vector — perfect for plugging into machine learning models, clustering, or any kind of downstream analytics.
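The first three stages can be sketched end to end in plain Python. This is a hypothetical, simplified illustration rather than Snowdrop’s pipeline: the regular expressions, the tiny legal-suffix dictionary, the `generic_ratio` cut-off, the made-up card number, and all function names are assumptions, and in practice the phrase list would come from a step such as PMI scoring.

```python
import re
from collections import Counter

# Hypothetical sketch of stages 1-3; patterns, dictionary and cut-off are assumptions.
CARD_PREFIX = re.compile(r"\bKARTE\s+\d+\S*", re.IGNORECASE)      # card metadata
TIMESTAMP = re.compile(r"\b\d{1,2}[.:]\d{2}(?:[.:]\d{2,4})?\b")   # dates and times
PUNCT = re.compile(r"[^\w\s]")                                   # any punctuation
LEGAL_SUFFIXES = {"ag", "gmbh", "kg", "ug", "ltd", "inc"}        # toy domain dictionary


def normalise(raw: str) -> list[str]:
    """Stage 1: drop card metadata, timestamps, punctuation and bare numbers."""
    text = CARD_PREFIX.sub(" ", raw)
    text = TIMESTAMP.sub(" ", text)
    text = PUNCT.sub(" ", text.lower())
    return [tok for tok in text.split() if not tok.isdigit()]


def join_phrases(tokens: list[str], phrases: set[tuple[str, str]]) -> list[str]:
    """Stage 2: merge known two-word terms into one token, e.g. erlebnis_akademie."""
    out, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) in phrases:
            out.append(f"{tokens[i]}_{tokens[i + 1]}")
            i += 2
        else:
            out.append(tokens[i])
            i += 1
    return out


def classify(tokens: list[str], doc_freq: Counter, n_docs: int,
             generic_ratio: float = 0.3) -> dict[str, str]:
    """Stage 3: label tokens as legal, generic (common) or distinctive (rare)."""
    labels = {}
    for tok in tokens:
        if tok in LEGAL_SUFFIXES:
            labels[tok] = "legal"
        elif doc_freq[tok] / n_docs > generic_ratio:
            labels[tok] = "generic"
        else:
            labels[tok] = "distinctive"
    return labels


def run_pipeline(raw_strings: list[str],
                 phrases: set[tuple[str, str]]) -> list[dict[str, str]]:
    docs = [join_phrases(normalise(s), phrases) for s in raw_strings]
    # Document frequency: in how many transactions each token appears.
    doc_freq = Counter(tok for doc in docs for tok in set(doc))
    return [classify(doc, doc_freq, len(docs)) for doc in docs]


raw = [
    "FCB AG Ticketing, Muenchen, DE",
    "KARTE 1234 ERLEBNIS AKADEMIE 25.09.2025 14:32, DE",  # made-up card data
    "PULLBEAR 6505 CENTRUM.G, DRESDEN, DE",
    "Gillet Tanken Waschen, Landau in der, DE",
]
results = run_pipeline(raw, phrases={("erlebnis", "akademie")})
for line, labels in zip(raw, results):
    print(line, "->", labels)
```

Document frequency is a deliberately simple stand-in for “how rare or common” a token is. On a corpus this small only the country code “de” crosses the cut-off and ends up generic; the idea is that common words such as “ticketing” would do the same at realistic transaction volumes.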

In short, we turn a transaction mess into a structured merchant profile – clean enough to drive real-time personalisation, behavioural segmentation, and automated categorisation at scale.
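To show why subword embeddings help with rare or misspelled brand names, the sketch below builds FastText-style word vectors by averaging character n-gram vectors (with `<` and `>` boundary markers) into a 300-dimensional vector, then matches a misspelled token to a brand by cosine similarity. It is a toy under clear assumptions: each n-gram vector is pseudo-random, seeded from an MD5 hash instead of being learned, so similarity here comes purely from shared spelling, whereas a trained FastText model would also encode context. The n-gram range, brand list and function names are hypothetical.

```python
import hashlib
import math
import random

# Toy sketch: n-gram vectors are random (MD5-seeded), not trained FastText weights.
DIM = 300  # same dimensionality as the merchant vectors described above


def char_ngrams(word: str, n_min: int = 3, n_max: int = 5) -> list[str]:
    """FastText-style character n-grams, with '<' and '>' marking word boundaries."""
    w = f"<{word}>"
    return [w[i:i + n] for n in range(n_min, n_max + 1) for i in range(len(w) - n + 1)]


def ngram_vector(ngram: str) -> list[float]:
    """Deterministic pseudo-random vector per n-gram (a stand-in for trained weights)."""
    seed = int.from_bytes(hashlib.md5(ngram.encode("utf-8")).digest()[:8], "big")
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(DIM)]


def embed(word: str) -> list[float]:
    """Average the vectors of a word's character n-grams."""
    grams = char_ngrams(word.lower())
    vec = [0.0] * DIM
    if not grams:
        return vec
    for g in grams:
        for i, x in enumerate(ngram_vector(g)):
            vec[i] += x
    return [x / len(grams) for x in vec]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hypothetical canonical brand list and a misspelled incoming token.
brands = ["pullbear", "gillet", "rewe"]
brand_vecs = {b: embed(b) for b in brands}
query = embed("pulbear")
scores = {b: cosine(query, v) for b, v in brand_vecs.items()}
best = max(scores, key=scores.get)
print(best, {b: round(s, 2) for b, s in scores.items()})
```

Because “pulbear” shares most of its character n-grams with “pullbear”, their vectors point in a similar direction, while brands with no shared n-grams score close to zero.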

How does this help banks?

Whether you’re trying to reduce manual review time or roll out the next AI-driven product experience, having clean, enriched financial transaction data makes a massive difference.

Here’s what we’ve delivered:

➡️ 28% more transactions automatically mapped to the right brand

➡️ Significant reduction in manual work – less friction, faster insights

➡️ Clean merchant signals that boost accuracy for downstream analytics, loyalty engines, and personalisation layers

In other words, we built a platform that helps you:

✅ Recognise merchants more accurately

✅ Categorise spending automatically

✅ Segment users based on real behaviour

✅ Feed cleaner data into your AI-driven engagement tools

The takeaway: Precision wins

Generic AI models might get you part of the way, but they miss the nuance. Our approach is simple: start with the right data structure, then layer in domain expertise and machine learning. This allows us to make sense of financial transaction data and drive smarter engagement and better outcomes.

We believe that domain-specific, real-time data enrichment, built on machine learning for financial data, is the key to unlocking the next wave of innovation in banking, fintech, and loyalty.

If you’re building the future of financial experiences, you need data that’s clean, contextual, and actionable – right out of the box.

Chief Strategy Officer

Experienced CPO and CMO leader with drive, passion and a results-oriented approach to achieving the strategic vision. Extensive experience energising and motivating teams across all areas of product management, product marketing and corporate marketing.